Domain focus and cultural neutrality

An issue with many so-called numeracy tests is that, in trying to apply number skills to real-life situations, they obfuscate the domain being tested. For example, the Australian NAPLAN numeracy test is not only a test of number skills; it also tests comprehension of the English language and of cultural traditions. When such a test is given to children for whom English is not a first language, and who were not raised with urban, white, middle-class values, it may not be clear whether the results reflect their ability to understand and work with numbers or their familiarity with English language and culture.

Active Math was developed as part of a doctoral research project to demonstrate that computer-based numeracy assessment may be less culturally biased than written tests. The research was carried out in the Kimberley region of Western Australia. The results showed that children who score very poorly in written tests sometimes display a very sound ability to understand and work with numbers in a computer-based test.

Active Math Java is a cutting-edge number skills test, which abstracts away from language and culture and focuses on raw number processing skills.

Probabilistic versus Deterministic Models

A second issue with many state-administered numeracy tests is that they evolved from a deterministic tradition, which is more applicable to Newtonian mechanics than to a psychometric test. Children are not machines, still less rocks drifting through space.

The basic deterministic model expresses a dependent variable y as a function of an independent variable x in the form:

y = f(x)

So if you can measure x, you can calculate y with complete certainty. The assumption behind many state-administered numeracy tests is that the score returned by any student sitting the test bears an exact relationship to their ability. So a score of, say, 80% might be said to mean the student has A-grade ability, 70% B-grade ability, and so on. In reality, this is a very naïve view of the world.

Probabilistic models allow for the uncertainty which exists in the real world. So in a probabilistic model of a psychometric test, whether numeracy or anything else, the outcome of any interaction between a student and a test item is regarded as a random event, just like the tossing of a coin. This might be expressed mathematically as:

$$p\{a\} = f(\delta_i, \zeta_v)$$

where $p$ is the probability of an event $a$ (such as the return of an incorrect answer), and this probability is a function of the difficulty $\delta_i$ of item $i$ and the ability $\zeta_v$ of student $v$.

If you tossed a coin, and it fell tails side up, you would not assume that there are tails on both sides. Similarly, if you ask a student to address a test item, and they return an incorrect answer, you should not assume that they do not have the ability to return a correct answer. They might have misread the question, or committed a random error.

Any teacher of mathematics knows that if you toss a coin 12 times, you will not always get 6 heads and 6 tails. They know that the observed frequency of events does not always match the theoretical probability of those events. They also know that the gap between the two tends to narrow as the number of trials grows: the standard error of an observed proportion shrinks in proportion to one over the square root of the number of trials. They know that 10, 12 or 15 trials will often yield outcomes 10%, 20% or 30% wide of the mark, and that to get a more accurate estimate through experimentation, you have to increase the number of trials to 100, 200 or 1000.
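The coin-tossing point can be checked exactly with the binomial distribution. The sketch below is purely illustrative (the function names are hypothetical, not part of Active Math Java): it computes the standard error of the observed proportion of heads and the exact probability that the observed proportion lands at least a given margin away from the true probability.

```python
from math import comb, sqrt


def standard_error(n, p=0.5):
    """Standard deviation of the observed proportion of heads in n fair tosses."""
    return sqrt(p * (1 - p) / n)


def prob_off_by_at_least(n, margin, p=0.5):
    """Exact binomial probability that the observed proportion of heads in
    n tosses differs from p by at least `margin` (0.1 = 10 percentage points)."""
    total = 0.0
    for k in range(n + 1):
        # A tiny tolerance guards against floating-point error at the boundary,
        # e.g. abs(40/100 - 0.5) evaluates to slightly less than 0.1.
        if abs(k / n - p) >= margin - 1e-12:
            total += comb(n, k) * p**k * (1 - p) ** (n - k)
    return total


for n in (12, 100, 1000):
    print(n, round(standard_error(n), 3), round(prob_off_by_at_least(n, 0.1), 4))
```

For 12 tosses, the probability of being at least 10 percentage points away from 50% is exactly 1588/4096, or about 39%; for 1000 tosses it is vanishingly small.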

Yet the Australian NAPLAN numeracy test has only 15 dichotomous items!

Matching item difficulty with student ability

The administrators of State numeracy tests face problems which go beyond whether or not a correct answer reflects fluent understanding or an incorrect answer reflects a total lack of understanding. They administer these tests in order to differentiate children (and teachers and schools). They therefore include in the tests a range of item difficulties, with the theory being that the most able students will return correct answers to all or most items while the less able students will return correct answers only on the easiest items.

Now it can be shown mathematically that the probability of two students being ranked correctly on a dichotomous item is always quite low, but it is highest when the ability of the students is closely matched with the difficulty of the items. I have given a simple mathematical argument in a blog, but as a matter of common sense, if the item is too easy, both students get it right, and cannot be ranked, and if the item is too hard, both students get it wrong, and cannot be ranked.
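The common-sense argument can be illustrated numerically. The text above does not specify the function f; the sketch below assumes the standard dichotomous Rasch (logistic) form, independent responses, and two hypothetical students A and B with abilities of 0.2 and -0.2 logits. The only outcome that ranks A above B is A right and B wrong, and its probability is scanned over a range of item difficulties.

```python
import math


def p_correct(ability, difficulty):
    """Assumed dichotomous Rasch model: probability of a correct answer."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def p_correct_ranking(ability_a, ability_b, difficulty):
    """Probability that A (stronger) is right and B (weaker) is wrong,
    assuming the two responses are independent."""
    return p_correct(ability_a, difficulty) * (1.0 - p_correct(ability_b, difficulty))


def best_difficulty(ability_a, ability_b, grid):
    """Item difficulty in `grid` that maximises the chance of a correct ranking."""
    return max(grid, key=lambda d: p_correct_ranking(ability_a, ability_b, d))


a, b = 0.2, -0.2                          # hypothetical abilities in logits
grid = [d / 10 for d in range(-30, 31)]   # item difficulties from -3.0 to +3.0
best = best_difficulty(a, b, grid)
print(best, round(p_correct_ranking(a, b, best), 3))
```

Under these assumptions the ranking probability peaks when the item difficulty sits midway between the two abilities, and even there it is only about 30%; items far too easy or far too hard push it towards zero.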

And because the designers of State numeracy tests set out to select items with a difficulty range spanning the ability range of all the students likely to sit the test, the optimum matching of ability with difficulty will only occur on at best two or three items for each student. In the blog example, when two students' ability is well matched with the difficulty of a test item, such that the probability of a correct answer is 55% for one student and 45% for the other, the probability of a correct ranking of the students is just 30%. So the similarity between the Australian Government NAPLAN numeracy test and a coin tossing experiment is a lot stronger than most people would care to admit.
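Assuming the two students answer independently, with $p_A = 0.55$ and $p_B = 0.45$, the 30% figure follows directly, and the three possible outcomes sum to 1:

$$
\begin{aligned}
P(\text{A ranked above B}) &= p_A(1-p_B) = 0.55 \times 0.55 = 0.3025\\
P(\text{tie}) &= p_A p_B + (1-p_A)(1-p_B) = 0.2475 + 0.2475 = 0.495\\
P(\text{B ranked above A}) &= (1-p_A)\,p_B = 0.45 \times 0.45 = 0.2025
\end{aligned}
$$

So on a single well-matched item, a tie is the most likely result, and the students are ranked the wrong way round about one time in five.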

Active Math Java represents a more powerful and reliable method of differentiating the number skills of students than the Australian Government NAPLAN numeracy test. A student (or teacher) may select the opening level of difficulty for a test, but after that the difficulty of the test items is automatically adjusted to match the ability of the student, so even if the test recorded only dichotomous item scores, it would make the fairest and most reliable comparison between students.
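The text does not describe the rule Active Math Java uses to adjust difficulty. As a hedged illustration of the general idea only, the sketch below uses a simple one-up/one-down staircase, a common textbook technique and not necessarily the one used here: the level rises after a correct answer and falls after an incorrect one. Such a staircase tends to hover around the level at which the student answers about half the items correctly. The level range of 1 to 10 is a hypothetical choice.

```python
def next_level(level, correct, min_level=1, max_level=10):
    """Hypothetical one-up/one-down rule: step up after a correct answer,
    down after an incorrect one, clamped to [min_level, max_level]."""
    step = 1 if correct else -1
    return max(min_level, min(max_level, level + step))


def run_staircase(start_level, responses, min_level=1, max_level=10):
    """Return the sequence of difficulty levels visited, starting from the
    level chosen by the student or teacher."""
    levels = [start_level]
    level = start_level
    for correct in responses:
        level = next_level(level, correct, min_level, max_level)
        levels.append(level)
    return levels


print(run_staircase(3, [True, True, False, True, False, False]))
```

Starting at level 3, the responses above move the student through levels 4, 5, 4, 5, 4 and back to 3, oscillating around the point where they begin to make errors.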

Boolean Raw Scores versus Scoring Rates

It can be shown mathematically that if, in addition to raw scores, you record the rate at which raw scores are returned, the probability of ranking students according to their ability is higher than if you record only raw scores. The idea of using the rates at which events happen to estimate student ability was first suggested by the Danish mathematician Georg Rasch, in two chapters of his book, Probabilistic Models for Some Intelligence and Attainment Tests. I have discussed this in two published papers and a blog. Almost no other contemporary researchers have addressed these chapters.
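A minimal illustration of why rates carry extra information: two hypothetical students who both answer 10 items correctly have identical raw scores, but if one took 60 seconds and the other 120 seconds, their scoring rates differ. The sketch below assumes, for the standard-error helper only, that correct answers arrive as a Poisson process, which is in the spirit of Rasch's rate-based models but is stated here as an assumption rather than as Active Math Java's actual computation.

```python
import math


def scoring_rate(correct, seconds):
    """Correct answers per minute."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return correct / (seconds / 60.0)


def rate_standard_error(correct, seconds):
    """Standard error of the rate if correct answers arrive as a Poisson
    process (an assumption): sqrt(count) / elapsed minutes."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return math.sqrt(correct) / (seconds / 60.0)


# Same raw score (10 correct), different elapsed times.
print(scoring_rate(10, 60), scoring_rate(10, 120))
```

Raw scores alone cannot separate these two students; their scoring rates of 10 and 5 correct answers per minute can.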

Active Math Java records scoring rates and uses this metric to estimate student ability, along the lines originally proposed by Georg Rasch in the seldom-cited chapters of his book. Active Math Java therefore applies probabilistic modelling in a unique manner. No other test, written or computer-based, estimates student ability in this way. I have discussed the reliability of the methodology in a published paper.

Scoring Rates versus tightly timed tests

In the 1950s and 1960s, certain practitioners used tightly timed tests to estimate student ability. This methodology was subsequently shown in the psychometric literature to be fraught with problems. I have discussed this at length in one of my published papers.

Active Math Java is not a tightly timed test. There is indeed no time limit. Item response times are quietly recorded in the background. In the test console, users are made aware of their accuracy, but their scoring rate is not displayed. Item response times are used by the computer to calculate item difficulty, and student performance is placed on a distribution curve with other students addressing items of similar difficulty.
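The text does not say exactly how response times are turned into item difficulty or how the distribution comparison is made. As a purely hypothetical sketch, one could take the median time taken to answer an item correctly as a difficulty index, and then place a student's scoring rate among peers who addressed items of similar difficulty using a z-score and a percentile rank. All function names and the choice of median and population standard deviation are assumptions for illustration.

```python
from statistics import mean, median, pstdev


def item_difficulty(correct_response_times):
    """Hypothetical difficulty index: median seconds taken to answer correctly."""
    return median(correct_response_times)


def z_score(value, peer_values):
    """Position of `value` relative to peers, in population standard deviations."""
    sd = pstdev(peer_values)
    if sd == 0:
        return 0.0
    return (value - mean(peer_values)) / sd


def percentile_rank(value, peer_values):
    """Percentage of peer values strictly below `value`."""
    below = sum(1 for v in peer_values if v < value)
    return 100.0 * below / len(peer_values)


peer_rates = [4.0, 5.0, 6.0, 8.0]  # hypothetical peer scoring rates (per minute)
print(item_difficulty([4.0, 6.0, 5.0]), percentile_rank(7.0, peer_rates))
```

Comparing only against peers at similar difficulty keeps a fast rate on easy items from being confused with a fast rate on hard ones.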

Far from representing a return to the speed test methodology of the 1950s and 1960s, Active Math Java represents an entirely new approach to the estimation of numeracy.

So Have a Go

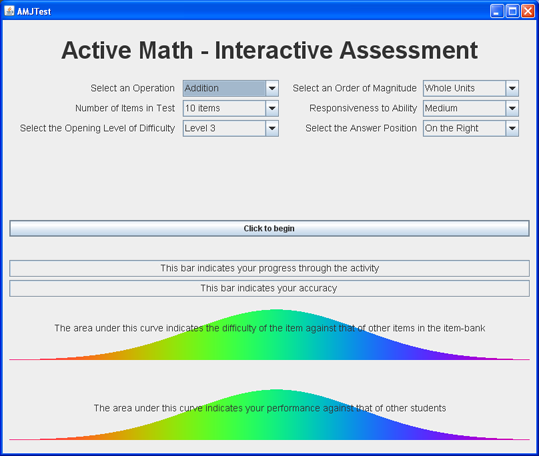

The picture above is a screenshot of Active Math Java.